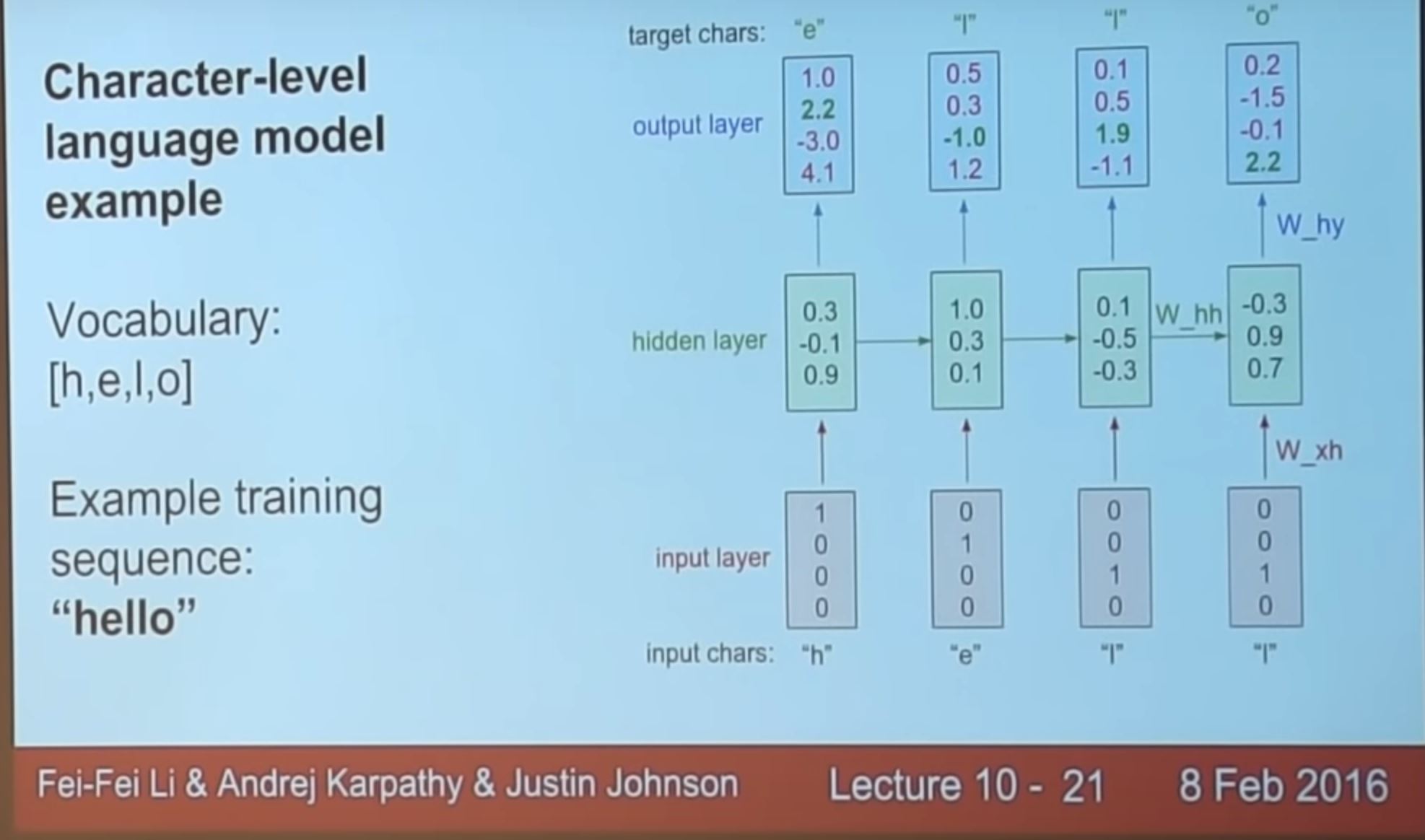

I am back to working through the Stanford Recurrent Neural Network (RNN) lecture by Andrej Karpathy. I’ve been taking a lot of notes, and the gears in my brain have been turning. One slide stood out today.

Initially, I thought the ‘Recurrent’ part of RNNs referred to continuously tweaking predictions based on the new input and on what had previously been predicted in the sequence. Plot twist: the ‘Recurrent’ part comes from applying a feed-forward neural network at every time step, reusing the same weights each time (unless I misunderstood).
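To make the weight-sharing idea concrete for myself, here is a minimal sketch of a vanilla RNN forward pass in plain Python. It assumes the standard formulation h_t = tanh(W_hh · h_{t-1} + W_xh · x_t) and y_t = W_hy · h_t, with biases left out for brevity. The names `W_hh`, `W_xh`, `W_hy`, the helper functions and the tiny sizes are my own illustrative choices, not code from the lecture.

```python
import math
import random

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rnn_step(h_prev, x, W_hh, W_xh, W_hy):
    """One vanilla RNN step (biases omitted, an assumption for brevity):
    h_t = tanh(W_hh h_{t-1} + W_xh x_t),  y_t = W_hy h_t."""
    pre = [a + b for a, b in zip(matvec(W_hh, h_prev), matvec(W_xh, x))]
    h = [math.tanh(p) for p in pre]
    y = matvec(W_hy, h)
    return h, y

def run_rnn(xs, W_hh, W_xh, W_hy, hidden_size):
    """Run the RNN over a sequence, returning the final hidden state and all outputs."""
    h = [0.0] * hidden_size  # initial hidden state
    outputs = []
    for x in xs:
        # The SAME W_hh, W_xh and W_hy are reused at every time step;
        # only the hidden state h carries information forward.
        h, y = rnn_step(h, x, W_hh, W_xh, W_hy)
        outputs.append(y)
    return h, outputs

def rand_matrix(rows, cols, rng):
    """Small random weights for illustration only (hypothetical init scheme)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
hidden, inp, out = 3, 2, 2
W_hh = rand_matrix(hidden, hidden, rng)
W_xh = rand_matrix(hidden, inp, rng)
W_hy = rand_matrix(out, hidden, rng)

# Two sequences that end with the same input but have different histories.
seq_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
seq_b = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
h_a, ys_a = run_rnn(seq_a, W_hh, W_xh, W_hy, hidden)
h_b, ys_b = run_rnn(seq_b, W_hh, W_xh, W_hy, hidden)
```

Even though both sequences end with `[1.0, 1.0]` and every step uses identical weights, the last outputs differ because the hidden state carries a summary of what came before. That seems to be where the "memory" lives, rather than in the weights changing from step to step.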

Anyway, more research is required. This is getting really interesting. You can see where I am in the video below.