A few days ago I was trying to break down a diagram of a vanilla Recurrent Neural Network (RNN), but I was left with a lot of unanswered questions. It was a great exercise for understanding what goes on inside the RNN, but it left me wanting specific answers. Today, by breaking down the code of an example RNN, I got those answers.

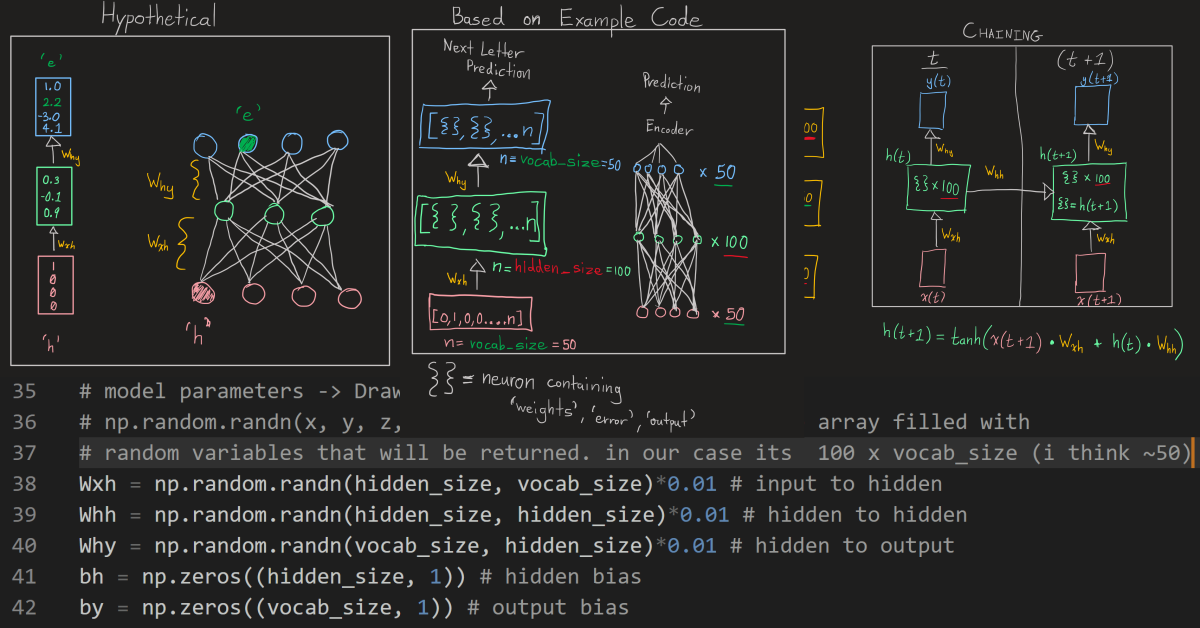

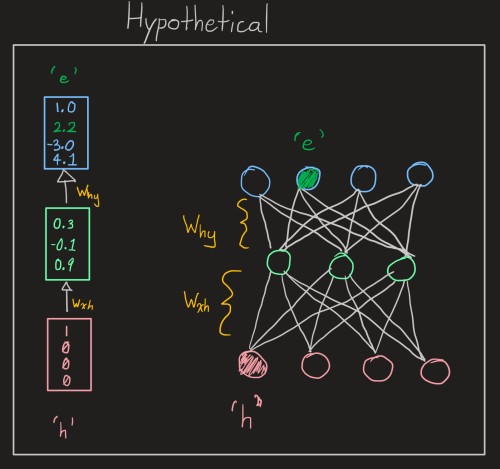

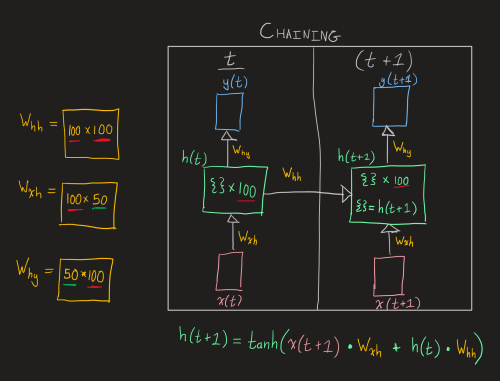

First of all, the questions (or at least one). The diagram allowed me to visualize the steps involved in the RNN sequencing, but not the actual values of the Neurons in the Network itself. The weights associated with each Neuron connection were also a bit unclear. In a traditional Neural Network there is a unique weight associated with every connection to a Neuron, but this was not explicitly displayed in the diagram. So were the weights structured differently in an RNN or not?

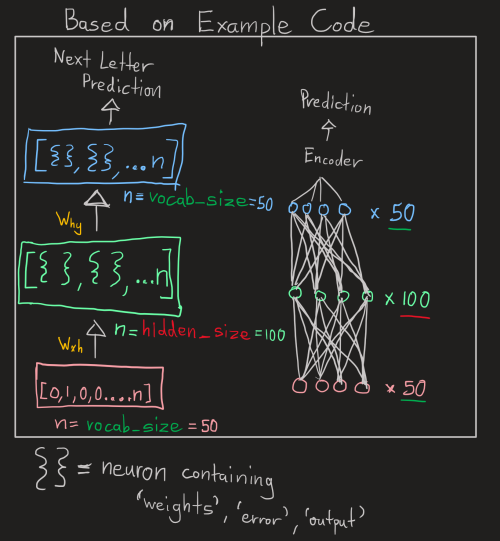

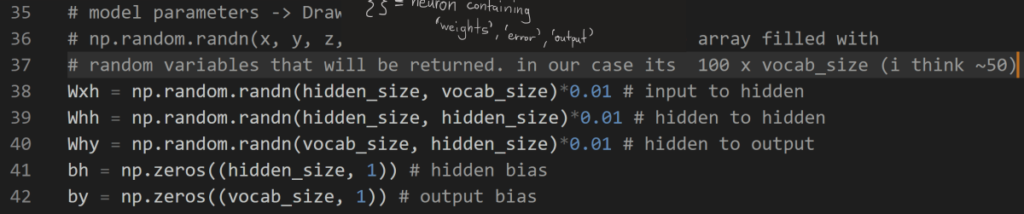

Upon reading through the example RNN’s code, I was able to confirm that a unique weight was associated with every connection to a Neuron. Additionally, there were 100 Neurons in the hidden layer. So the number of weights connecting the Hidden Layer at time step ‘t’ and the Hidden Layer at time step ‘t + 1’ would be ‘100 * 100 = 10 000’. That is a really high number compared to my previous Neural Networks, which had only a few Neurons in each layer. Perhaps a large number of Neurons in each layer is the norm.
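To make that count concrete, here is a minimal NumPy sketch of the hidden-to-hidden weights. It is not the code from the example RNN I read. The names `W_xh`, `W_hh`, `b_h` and `step`, the `tanh` activation, and the input size of 27 are all assumptions made for illustration; only the 100 hidden Neurons come from above. One thing worth noting is that a vanilla RNN reuses the same hidden-to-hidden matrix at every time step, so the 10 000 weights are not duplicated per step.

```python
import numpy as np

hidden_size = 100  # from the post: 100 Neurons in the hidden layer
input_size = 27    # assumption: a hypothetical input size, not from the post

rng = np.random.default_rng(0)

# One weight per connection: every input unit to every hidden Neuron,
# and every hidden Neuron at step t to every hidden Neuron at step t + 1.
W_xh = rng.standard_normal((hidden_size, input_size)) * 0.01
W_hh = rng.standard_normal((hidden_size, hidden_size)) * 0.01
b_h = np.zeros(hidden_size)


def step(x, h_prev):
    """Compute the next hidden state from the current input and previous state."""
    return np.tanh(W_xh @ x + W_hh @ h_prev + b_h)


# Run a few time steps; the same W_hh is reused at every step.
h = np.zeros(hidden_size)
for t in range(3):
    x = np.zeros(input_size)
    x[t] = 1.0  # a one-hot input, purely for illustration
    h = step(x, h)

print(W_hh.size)  # number of hidden-to-hidden weights
print(h.shape)    # shape of the hidden state
```

Printing `W_hh.size` gives the same 100 * 100 figure, and the hidden state stays a vector of 100 values no matter how many steps are run.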

Overall, I would not have learned all of this if I had not gotten into the code. Some try to avoid diving into another person’s code, but that’s the only way you will truly understand what is going on. It may take some adjusting, but reverse engineering code will answer questions that diagrams never could. I’m not faulting diagrams, but they are meant to provide a simplified representation, nothing more. Never fear another person’s code, whether it is well commented or not.

Anyways, that’s all for today. Until next time…