I am back for another round of the 100 Days of Code Challenge. I recently completed the challenge for the first time and wrote a 100 Days Of Code Review covering the highs and lows of my journey. The biggest takeaway was that I am not done, so I have started round two. I ended my last round working on understanding how a Recurrent Neural Network (RNN) works.

These past four days I have been breaking down the update algorithm used to move the RNN through each of its time steps. To put it simply, the RNN takes input as you type and tries to predict the next character (like autocomplete). So how does the RNN remember what you typed previously, and how does it update its prediction? That is the question I have been working to answer by reverse-engineering the code in an example RNN.

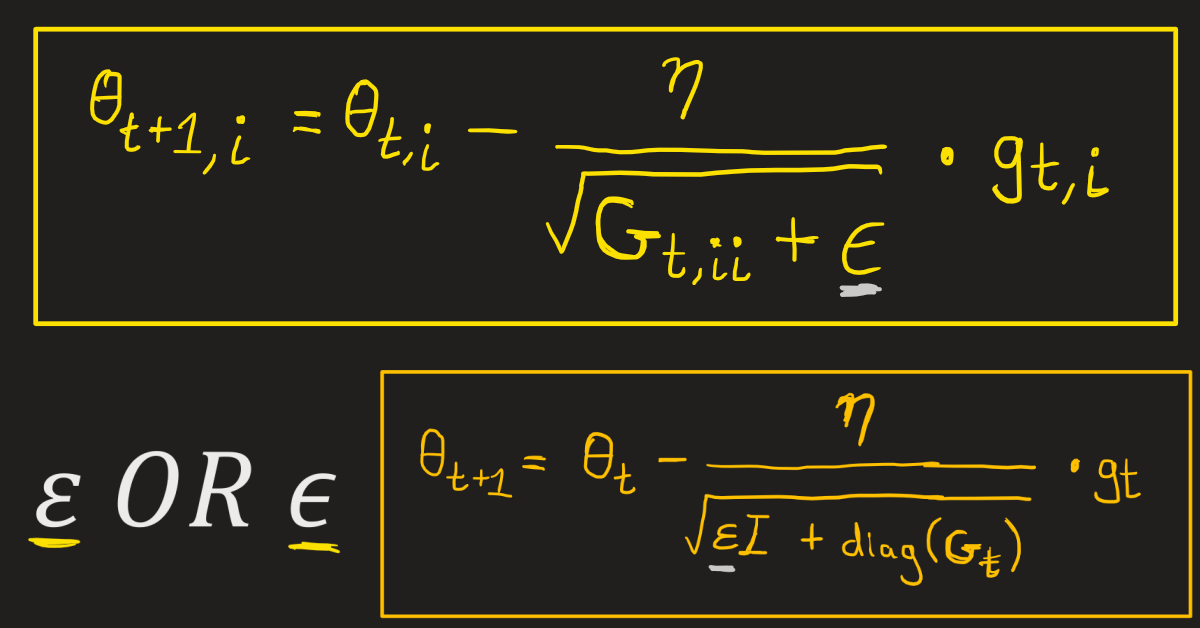
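To make the "how does it remember" question concrete, here is a minimal sketch of a single vanilla RNN time step in plain Python. It assumes the common textbook formulation `h_t = tanh(W_xh x_t + W_hh h_(t-1) + b_h)` and `y_t = W_hy h_t + b_y`. It is not the example code I am breaking down. The names (`rnn_step`, `predict_next`, `Wxh`, `Whh`, `Why`), the four-letter vocabulary, and the random untrained weights are all hypothetical. The key idea is that the hidden state `h` is handed from one step to the next, so it carries a summary of everything typed so far.

```python
import math
import random

def matvec(M, v):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def softmax(scores):
    # Turn raw scores into probabilities (shift by the max for numerical stability).
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def one_hot(index, size):
    # Encode a character as a vector with a single 1.0 at its index.
    v = [0.0] * size
    v[index] = 1.0
    return v

def rnn_step(x, h_prev, Wxh, Whh, Why, bh, by):
    # h_t = tanh(Wxh x_t + Whh h_{t-1} + bh): the new hidden state mixes the
    # current character with the previous hidden state (the network's "memory").
    pre = [a + b + c for a, b, c in zip(matvec(Wxh, x), matvec(Whh, h_prev), bh)]
    h = [math.tanh(v) for v in pre]
    # y_t = Why h_t + by: one unnormalized score per character in the vocabulary.
    y = [a + b for a, b in zip(matvec(Why, h), by)]
    return h, y

def random_matrix(rows, cols, rng):
    # Small random weights; a real model would learn these during training.
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

# Hypothetical toy setup: a 4-character vocabulary and 8 hidden units,
# with untrained random weights (so the predictions are not meaningful yet).
vocab = ["h", "e", "l", "o"]
char_to_ix = {ch: i for i, ch in enumerate(vocab)}
V, H = len(vocab), 8
rng = random.Random(0)
Wxh = random_matrix(H, V, rng)
Whh = random_matrix(H, H, rng)
Why = random_matrix(V, H, rng)
bh, by = [0.0] * H, [0.0] * V

def predict_next(text):
    # Feed the characters one at a time, carrying the hidden state forward.
    h = [0.0] * H
    for ch in text:
        h, y = rnn_step(one_hot(char_to_ix[ch], V), h, Wxh, Whh, Why, bh, by)
    return h, softmax(y)

h, probs = predict_next("hel")
for ch, p in zip(vocab, probs):
    print(f"P(next = {ch!r}) = {p:.3f}")
```

Because the weights are untrained, the printed probabilities should be roughly uniform and carry no real meaning. Training is what adjusts the weights so the predictions become useful. Feeding `"oel"` instead of `"hel"` ends on the same character but produces a different hidden state. That difference is the network's memory of the earlier characters at work.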

This has been quite the struggle. What seemed like a simple code breakdown has led me to read research papers on the Adaptive Gradient Algorithm (AdaGrad). Those papers forced me to review mathematical notation to understand their formulas, which in turn sent me back to the Greek alphabet to tell apart similar-looking symbols that mean different things. This breakdown has taken me on quite a journey so far.
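For reference, AdaGrad is usually written as a per-parameter update. You keep a running sum G of squared gradients for each parameter and divide the learning rate by its square root: θ ← θ − η·g / (√G + ε). Below is a minimal plain-Python sketch of that rule, not the code from the example RNN. The function names, the learning rate `lr=0.5`, and the toy function f(x, y) = x² + 10y² are hypothetical choices for illustration. In an RNN, the parameters would instead be the weight matrices and biases, each with its own cache of squared gradients.

```python
import math

def adagrad_update(params, grads, cache, lr=0.5, eps=1e-8):
    # Update each parameter in place using the AdaGrad rule.
    for i, g in enumerate(grads):
        cache[i] += g * g  # running sum of squared gradients (G)
        params[i] -= lr * g / (math.sqrt(cache[i]) + eps)  # step shrinks as G grows

def grad(p):
    # Gradient of the hypothetical toy function f(x, y) = x**2 + 10 * y**2.
    x, y = p
    return [2 * x, 20 * y]

params = [3.0, 2.0]
cache = [0.0, 0.0]
for _ in range(200):
    adagrad_update(params, grad(params), cache)
print(params)  # both values end up very close to the minimum at (0, 0)
```

On the very first update, each coordinate moves by `lr` (up to ε), no matter how large its gradient is, because g / √(g²) = ±1. After that, a coordinate with a history of large gradients (here y) gets a smaller effective step size, lr / √G. That per-parameter scaling is the "adaptive" part of the name.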

Thankfully, I am almost finished breaking down the code and understanding exactly what is going on. The only question I have left is, “What other algorithms have been used in RNN update steps besides AdaGrad?” But that’s for another day.

Anyway, it has been quite a process and I’m super tired, so until next time, here is a video quickly demonstrating AdaGrad. It may be a bit complex, but it goes into more detail.