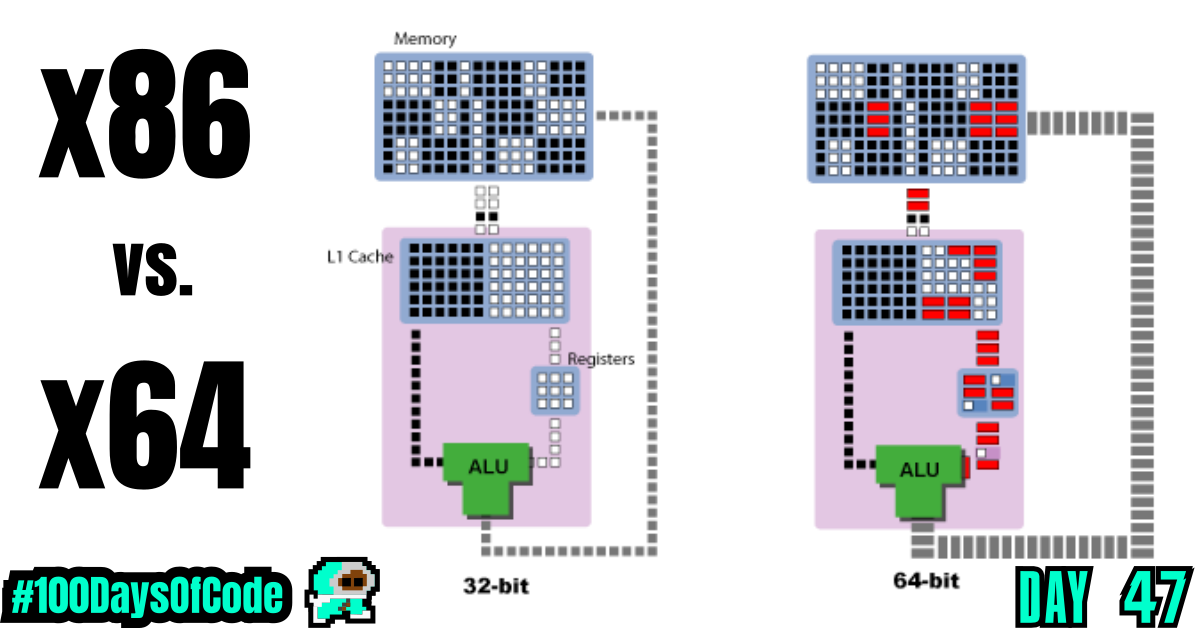

I got my groove back by going back and re-reading Chapter 2. It covers computer architecture, and going over the core components of a computer helped me better understand the role registers play in building and executing functions in code. I haven’t finished re-reading the chapter and taking notes, but so far it has given me the clarity I needed to take on Chapter 4. Step by step I’m improving, and it feels great. Now I just need to better understand 32-bit x86 processors and 64-bit x64 (x86-64) processors.

TL;DR

Okay, so here are the highlights of what I did:

- Went through Chapter 2 of “Programming from the Ground Up”. I tried to move really slowly, taking notes on terminology and breaking down bytes vs. bits on an x86 processor. So far it has helped me stay focused and connect all the dots.

Notes from “Programming from the Ground Up”

Chapter 2 Notes

Structure of Computer Memory

Modern computer architecture is based on an architecture called the Von Neumann architecture, named after its creator, John von Neumann. The Von Neumann architecture divides the computer into two main parts:

- The CPU (for Central Processing Unit)

- The memory

This architecture is used in all modern computers, including personal computers, supercomputers, mainframes, and even cell phones.

To understand how the computer views memory, imagine your local post office. It usually has a room filled with PO Boxes. These boxes are similar to computer memory in that both are numbered sequences of fixed-size storage locations. For example, if you have 256 megabytes of computer memory, your computer contains roughly 256 million fixed-size storage locations. Or, to use our analogy, 256 million PO Boxes.

- Each location has a number,

- and each location has the same, fixed-length size.
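To make the PO Box picture concrete, here is a minimal Python sketch. It assumes byte-addressable memory (every location is one byte, as on x86) and a made-up size of 16 locations; `store` and `load` are hypothetical helper names, not anything from the book.

```python
# A toy model of byte-addressable memory: a row of numbered "PO Boxes",
# each exactly one byte (8 bits) wide.
MEMORY_SIZE = 16  # hypothetical, tiny size so it is easy to inspect

memory = bytearray(MEMORY_SIZE)  # every location starts out holding 0


def store(address: int, value: int) -> None:
    """Put a single number into the box at `address`."""
    memory[address] = value  # bytearray raises ValueError unless 0 <= value <= 255


def load(address: int) -> int:
    """Read the single number stored in the box at `address`."""
    return memory[address]


store(3, 42)
store(7, 255)
print([(addr, load(addr)) for addr in range(MEMORY_SIZE) if load(addr) != 0])
# [(3, 42), (7, 255)]
```

`bytearray` enforces the "one small number per box" rule for us: assigning anything outside 0 to 255 raises a `ValueError`, and using an address past the end raises an `IndexError`.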

The difference between a PO Box and computer memory is that you can store all different kinds of things in a PO Box, but you can only store a single number in a computer memory storage location. For clarity: each location holds one byte (8 bits), so 1 megabyte (1,000,000 bytes) = 8,000,000 bits. A bit holds a single binary value, 1 or 0 (on or off). Computers use binary because a two-state, on/off signal is simple and reliable to build in hardware.
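Here is the bits-and-bytes arithmetic worked through in Python. It assumes the decimal megabyte (1,000,000 bytes); with the binary convention (1,048,576 bytes) the figure would be 8,388,608 bits instead.

```python
BITS_PER_BYTE = 8
BYTES_PER_MEGABYTE = 1_000_000  # decimal megabyte, matching the 8,000,000-bit figure

bits_per_megabyte = BYTES_PER_MEGABYTE * BITS_PER_BYTE
print(bits_per_megabyte)  # 8000000

# Each bit is 0 or 1, so 8 bits give 2**8 possible patterns.
values_per_byte = 2 ** BITS_PER_BYTE
print(values_per_byte)      # 256
print(values_per_byte - 1)  # 255, the largest number one byte can hold

# The number 200 as the 8 on/off bits stored in a single location.
print(format(200, "08b"))   # 11001000

# 256 megabytes is roughly 256 million one-byte storage locations.
print(256 * BYTES_PER_MEGABYTE)  # 256000000
```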

Everything must be stored in the computer’s memory:

- The location of your cursor on the screen

- The size of each window on the screen

- The shape of each letter of each font being used

- The layout of all of the controls on each window

- The graphics for all of the toolbar icons

- etc…

In addition to all of this, the Von Neumann architecture specifies that not only computer data should live in memory, but the programs that control the computer’s operation should live there, too. In fact, in a computer, there is no difference between a program and a program’s data except how it is used by the computer. They are both stored and accessed the same way.
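The bytes in memory don't carry a label saying "instruction" or "data"; only how they get used decides what they mean. Here is a quick Python illustration of that idea. To keep it runnable it uses text vs. number instead of program vs. data, reading the same two bytes two different ways. The little-endian byte order matches x86; the choice of bytes is arbitrary.

```python
raw = bytes([0x48, 0x69])  # two bytes sitting in memory

# Interpretation 1: ASCII characters.
as_text = raw.decode("ascii")
# Interpretation 2: one 16-bit integer, least significant byte first (x86 order).
as_number = int.from_bytes(raw, "little")

print(as_text)    # Hi
print(as_number)  # 26952
```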

The CPU

So how does the computer function? Obviously, simply storing data doesn’t help much: you need to be able to access, manipulate, and move it. That’s where the CPU comes in.

The CPU reads in instructions from memory one at a time and executes them. This is known as the fetch-execute cycle. The CPU contains the following elements to accomplish this:

- Program Counter (used to tell the computer where to fetch the next instruction from)

- Instruction Decoder (figures out what the instruction means)

- Data bus (the connection between the CPU and memory)

- General-purpose registers (where the main action happens)

- Arithmetic and logic unit

There is no difference between the way data and programs are stored; they are just interpreted differently by the CPU. The program counter holds the memory address of the next instruction to be executed. The CPU begins by looking at the program counter and fetching whatever number is stored in memory at that address.

That number is then passed on to the instruction decoder, which figures out what the instruction means. This includes what process needs to take place (addition, subtraction, multiplication, data movement, etc.) and what memory locations are going to be involved in this process. Computer instructions usually consist of both:

- the actual instruction

- the list of memory locations that are used to carry out the instruction.
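A single number can hold both the instruction and its memory locations. The decoder below illustrates this for a made-up 16-bit format. This is not how real x86 instructions are encoded; `decode`, `encode`, and the opcode table are all hypothetical. It pulls the fields out with bit shifts and masks.

```python
# Hypothetical 16-bit instruction format (NOT a real x86 encoding):
#   bits 15-12: operation code (what to do)
#   bits 11-6 : first memory address
#   bits  5-0 : second memory address
OPERATIONS = {0x0: "halt", 0x1: "add", 0x2: "subtract", 0x3: "move"}


def decode(word: int) -> tuple[str, int, int]:
    """Split one instruction number into (operation, address_a, address_b)."""
    opcode = (word >> 12) & 0xF   # top 4 bits
    addr_a = (word >> 6) & 0x3F   # next 6 bits
    addr_b = word & 0x3F          # low 6 bits
    return OPERATIONS[opcode], addr_a, addr_b


def encode(operation: str, addr_a: int, addr_b: int) -> int:
    """Pack an operation and two addresses back into one number."""
    opcode = {name: code for code, name in OPERATIONS.items()}[operation]
    return (opcode << 12) | (addr_a << 6) | addr_b


word = encode("add", 10, 11)
print(hex(word), decode(word))  # 0x128b ('add', 10, 11)
```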

Now the computer uses the data bus to fetch the contents of the memory locations to be used in the calculation. The data bus is the connection between the CPU and memory: the actual wires that connect them. If you look at the motherboard of the computer, the wires that go out from the memory are your data bus.

In addition to the memory on the outside of the processor, the processor itself has some special, high-speed memory locations called registers. There are two kinds of registers:

- general registers (where the main action happens)

- special-purpose registers (registers which have very specific purposes)

Addition, subtraction, multiplication, comparisons, and other operations generally use general-purpose registers for processing. However, computers have very few general-purpose registers. Most information is stored in main memory, brought into the registers for processing, and then put back into memory when the processing is completed. Special-purpose registers are registers which have very specific purposes; we will discuss these as we come to them.
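Here is the load, process, store pattern as a minimal Python sketch. The two registers borrow 32-bit x86 names (`eax`, `ebx`) for flavor, but the model itself is hypothetical. Memory is a dictionary keyed by address, and registers are just named slots.

```python
# Main memory: plenty of slots (in real hardware, slower to reach).
memory = {100: 7, 101: 35, 102: 0}

# Only a handful of general-purpose registers: fast but scarce.
registers = {"eax": 0, "ebx": 0}

# 1. Bring the operands in from memory.
registers["eax"] = memory[100]
registers["ebx"] = memory[101]

# 2. Do the work inside the registers.
registers["eax"] = registers["eax"] + registers["ebx"]

# 3. Put the result back into memory when done.
memory[102] = registers["eax"]

print(memory[102])  # 42
```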

Now that the CPU has retrieved all of the data it needs, it passes on the data and the decoded instruction to the arithmetic and logic unit for further processing. Here the instruction is actually executed. After the results of the computation have been calculated, the results are then placed on the data bus and sent to the appropriate location in memory or in a register, as specified by the instruction.
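Putting the whole cycle together gives a toy fetch-decode-execute loop in Python. Everything here is hypothetical: the instruction names, the two registers, and the `run` and `alu` helpers. Instructions are stored as tuples rather than encoded numbers to keep it readable. Program and data still share one memory list, as the Von Neumann architecture describes.

```python
def alu(op: str, a: int, b: int) -> int:
    """Arithmetic and logic unit: actually performs the operation."""
    if op == "add":
        return a + b
    if op == "sub":
        return a - b
    if op == "mul":
        return a * b
    raise ValueError(f"unknown ALU operation: {op}")


def run(memory: list) -> list:
    """Run the toy machine until 'halt'; returns the (mutated) memory."""
    registers = [0, 0]  # two general-purpose registers
    pc = 0              # program counter
    while True:
        instruction = memory[pc]     # fetch
        pc += 1
        op, *operands = instruction  # decode
        if op == "halt":
            return memory
        if op == "load":             # register <- memory[address]
            reg, address = operands
            registers[reg] = memory[address]
        elif op == "store":          # memory[address] <- register
            reg, address = operands
            memory[address] = registers[reg]
        else:                        # execute in the ALU, write back to reg_a
            reg_a, reg_b = operands
            registers[reg_a] = alu(op, registers[reg_a], registers[reg_b])


program = [
    ("load", 0, 6),   # address 0: r0 <- memory[6]
    ("load", 1, 7),   # address 1: r1 <- memory[7]
    ("mul", 0, 1),    # address 2: r0 <- r0 * r1
    ("store", 0, 8),  # address 3: memory[8] <- r0
    ("halt",),        # address 4
    None,             # address 5: unused
    6,                # address 6: data
    7,                # address 7: data
    0,                # address 8: the result goes here
]
print(run(program)[8])  # 42
```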

This is a very simplified explanation. Processors have advanced quite a bit in recent years and are now much more complex. Although the basic operation is still the same, it is complicated by the use of:

- cache hierarchies

- superscalar processors

- pipelining

- branch prediction

- out-of-order execution

- microcode translation

- coprocessors

- and other optimizations

Conclusion

That’s all for today. If you are interested in the MIT course, you can check out the video lecture I’m currently going through. The lecture is helpful but isn’t sufficient by itself. Anyway, until next time, PEACE!