Today was pretty boring. Just slowly grinding away at more Big O Notation. I didn’t really feel that engaged today. Might be a bit of burnout from yesterday’s progress. Also I was kind of busy today so I didn’t spend as much time studying. Oh well, Tomorrow is another opportunity hopefully.

TLDR;

Okay, so here are the highlights of what I did:

- Read through the Big O notation section in “Cracking the Coding Interview” by Gayle L. McDowell. I went through a few example problems. They were the simpler ones that I could already solve quickly at a glance. I am hoping that by building up my process I will be better prepared to solve the more difficult problems. This was what I was taught to do when solving calculus problems. Build up a successful strategy with simpler problems so that you won’t get freaked out when you are facing more challenging problems.

- Looked into some resources for additional Big O Notation Practice Problems. I found a few. I will work on those after completing my notes from the book.

Notes on Big O Notation

Writing Big O Notation for Algorithms

Drop the Constants

It is very possible for O(N) code to run faster than O(1) code for specific inputs. Big O just describes the rate of increase. For this reason, we drop the constants in runtime. An algorithm that one might have described as O(2N) is actually O(N).

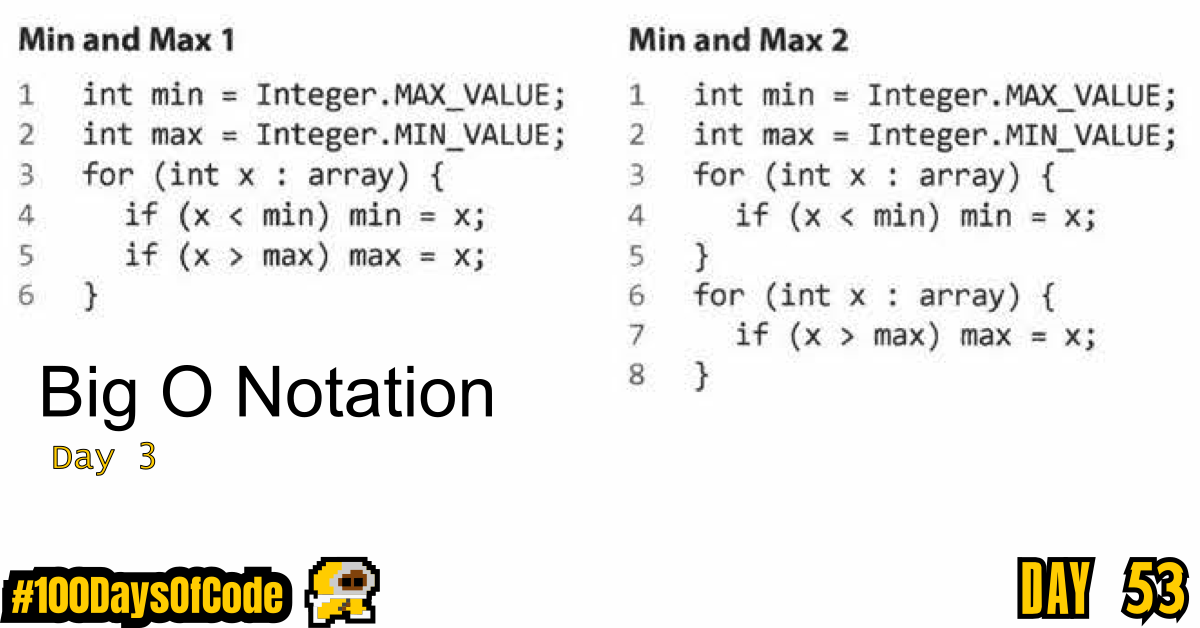

Many people resist doing this. They will see code that has two (non-nested) for loops and continue this O(2N). They think they’re being more “precise” They’re not. Compare the two sets of code for an example.

// Example 1

int min = INTEGER.MAX_VALUE;

int max = INTEGER.MIN_VALUE;

for (int x : array){

if (x < min) min = x;

if (x > max) max = x;

}

// Example 2

int min = INTEGER.MAX_VALUE;

int max = INTEGER.MIN_VALUE;

for (int x : array){

if (x < min) min = x;

}

for (int x : array){

if (x > max) max = x;

}

Which one is faster? The first one does one for loop and the other one does two for loops. But then, the first solution has two lines of code per for loop rather than one.

If you’re going to count the number of instructions, then you’d have to go to the assembly level and take into account that multiplication requires more instructions than addition, how the compiler would optimize something, and all sorts of other details.

This would be horrendously complicated, so don’t even start going down this road. Big O allows us to express how the runtime scales. We just need to accept that it doesn’t mean that O(N) is always better than O(N^2).

Conclusion

That’s all for today. If you are interested in the MIT course you can check out the video lecture I’m currently going through. The lecture is helpful but isn’t sufficient by itself. Anyways, until next time PEACE!