Today, the challenge has come. I finally got to a difficult topic. I was studying amortized time in algorithms. I have a very vague idea of the meaning and it’s applications. I found an MIT lecture on it but that didn’t really help. I feel like they might be skipping some steps when it comes to the explanations. This might take me another day or two to fully understand it depending on how long I study for.

TLDR;

Okay, so here are the highlights of what I did: KV

- Still reading through the Big O notation section in “Cracking the Coding Interview” by Gayle L. McDowell. I got stuck on calculating the Amortized time for algorithm problems. I understand the real world concept but the actual math that produces answers I don’t understand. I need to spend a lot more time on this.

- I looked up some YouTube videos to try and explain how Amortization works in computer algorithms. I found one MIT lecture that covered the topic but it seemed like it was all just going over my head. Something isn’t clicking for me. I need to re-read my notes on this so far to try and improve.

Notes on Amortized Time

Amortized Time

In many programming languages we can declare variables that hold information. In lower level languages (e.g. C, Java, Assembly, etc – I think??? lol) we need to declare the type of data that will be stored in memory and how much space it will take. In high level languages (e.g. Python, JavaScript, Ruby, etc – I think lol) this is not necessary.

This is especially important when it comes to arrays (ArrayLists). An ArrayList, or a dynamically resizing array, allows you to have the benefits of an array while offering flexibility in size. You won’t run out of space in the ArrayList since its capacity will grow as you insert elements.

An ArrayList is implemented with an array. When the array hits capacity, the ArrayList class will create a new array with double the capacity and copy all the elements over to the new array. So then how can we describe the runtime of insertion? This is a tricky question.

The array could be full. If the array contains N elements, then inserting a new element will take O(N) time. The reason it will be O(N) time is because you will have to create a new array of size 2N and then copy N elements over. So literally having to perform the insertion operation on every element in the arrayList. That is why the insertion will take O(N) time.

However, we also know that this doesn’t happen very often. The vast majority of the time insertion will be in O(1) time.

So then, for this particular situation We need a concept that takes both scenarios into account. This is what amortized time does. It allows us to describe that, yes, this worst case happens every once in a while. But once it happens, it won’t happen again for so long that the cost is “amortized”.

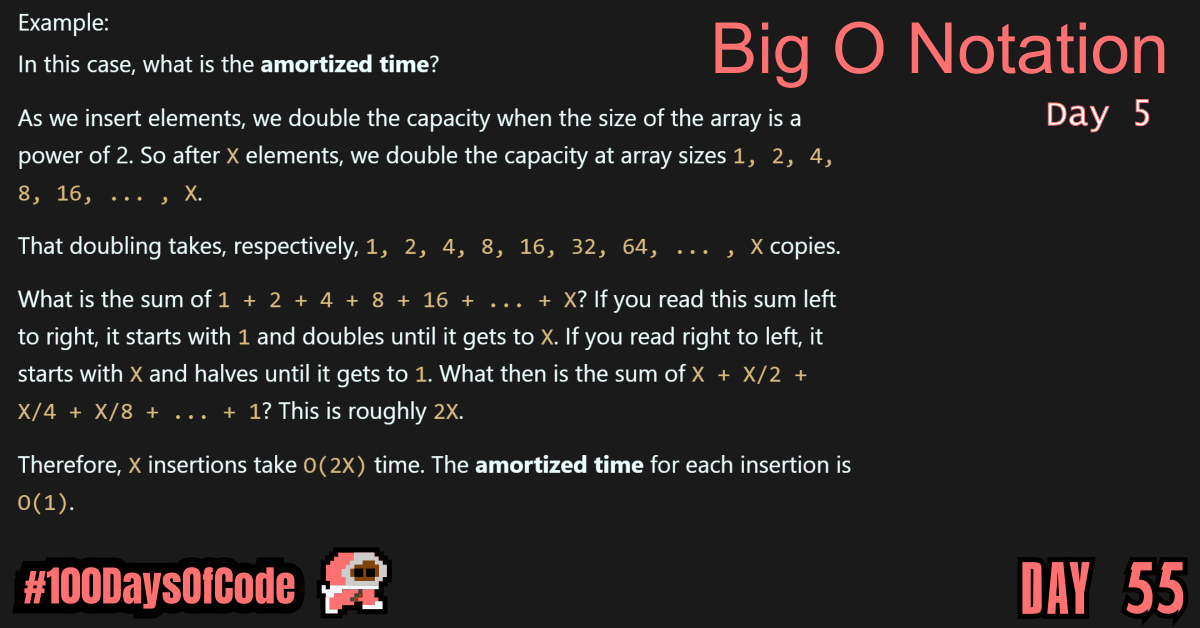

Example:

In this case, what is the amortized time?

As we insert elements, we double the capacity when the size of the array is a power of 2. So after X elements, we double the capacity at array sizes 1, 2, 4, 8, 16, ... , X.

That doubling takes, respectively, 1, 2, 4, 8, 16, 32, 64, ... , X copies.

What is the sum of 1 + 2 + 4 + 8 + 16 + ... + X? If you read this sum left to right, it starts with 1 and doubles until it gets to X. If you read right to left, it starts with X and halves until it gets to 1. What then is the sum of X + X/2 + X/4 + X/8 + ... + 1? This is roughly 2X.

Therefore, X insertions take O(2X) time. The amortized time for each insertion is O(1).

Conclusion

That’s all for today. If you are interested in the MIT course you can check out the video lecture I’m currently going through. The lecture is helpful but isn’t sufficient by itself. Anyways, until next time PEACE!