I reviewed a ton and it’s starting to make sense. While going through the 3 main methods of calculating Amortized time I had a moment of accomplishment. I don’t fully get it but I’m chipping away at this thing slowly. I had to finally look into logarithms properly. I learned them at some point in school but I have never clicked with the term. I don’t understand the true meaning of a logarithmic function. I am only familiar with it’s place in comparison to other functions and what it’s shape is on a graph. I still have some much work to do but that’s the best part about studying to improve one’s self. It isn’t about meeting a course requirement but rather it is about understanding the material.

TLDR;

Okay, so here are the highlights of what I did:

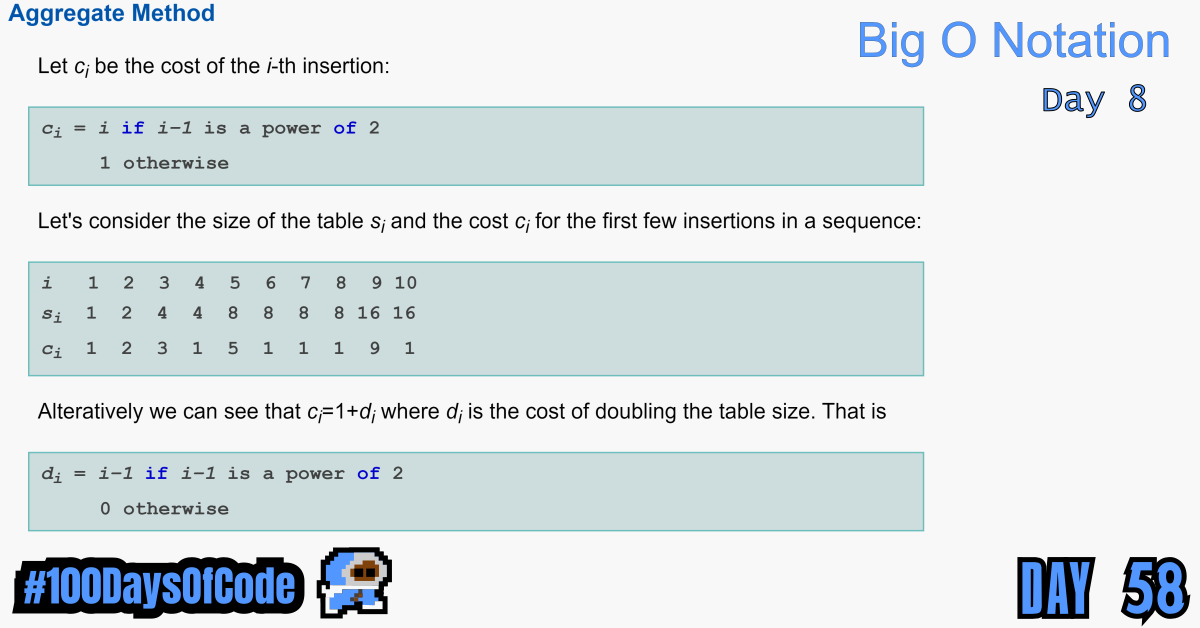

- Read through some articles in Wikipedia and some lecture notes on Amortized Analysis. Wrote some notes on the 3 main methods used to calculate Amortized run times. I am still trying to breakdown the Aggregate Method.

- I looked into logarithms. They keep showing up and I am not confident at a glance I know what they are doing in an equation or function. This does not seem right. I figure reviewing them would help me understand the math behind some of these Big O expressions and more. I hope it goes well LOL.

Notes on Amortized Time

In the case of an Arraylist needing to increase it’s size, we can see that as the size of the Arraylist increases, The number of times we need to increase the size of the Arraylist decreases. This is based on the assumption that the Arraylist starts off at a small size (e.g. a size of 4). As we insert values into that Arraylist it’s size will increase to accommodate the new values. In most cases the size increase is in a doubling action (e.g. The Arraylist will go from a size of 4 to a size of 8).

Well, if the size doubles from 4 -> 8 when we try to insert a fifth element into an ArrayList we will be left with an Arraylist with 5 values and 3 empty slots. So we can have 3 more insertions before we must double again to accommodate a ninth value. Now the doubling with take place again to increase the Arraylist size from 8 -> 16. Just like before we have all the previous values plus the new insertion. The Arraylist now has 9 values and 7 empty slots. So every time the Arraylist doubles, the frequency of the doubling action is decreased by half??? (If the table is doubled in size every time it is needed, then the resizing operation occurs with exponentially decreasing frequency.)

So in this type of situation we say that the insertion operation has O(1) amortized run time because the time required to insert an element is O(1) on average, even though some elements trigger a lengthy rehashing of all the elements of the hash table. Basically, those infrequent instances (with an O(n) run time) where we must resize the table and then perform an insertion for all the values in the array does not change the average run time of O(1). So those infrequent run times from resizing are negligible but are considered by us stating that the insertion operation has an Amortized run time of O(1).

It is crucial that the array size grow geometrically (doubling). It might be tempting to grow the array by a fixed increment (e.g., 100 elements at time), but this results in asymptotic linear rather than constant amortized running time.

So then, for this particular situation We need a concept that takes both scenarios into account. This is what amortized time does. It allows us to describe that, yes, this worst case happens every once in a while. But once it happens, it won’t happen again for so long that the cost is “amortized”(So this is a more realistic calculation for the complexity of an algorithm?? Not a calculation for the absolute worst complexity possible for any operation).

The Different Methods We Can Use to Calculate Amortized Time

There are 3 main methods used for Amortized Analysis:

- The Aggregate Method, where the total running time for a sequence of operations is analyzed.

- The Accounting, / Banker’s Method, where we impose an extra charge or on inexpensive operations and use it to pay for expensive operations later on.

- The Potential / Physicist’s Method, in which we derive a potential function characterizing the amount of extra work we can do in each step. This potential either increase or decreases with each successive operation, but cannot be negative.

Conclusion

That’s all for today. If you are interested in the MIT course you can check out the video lecture I’m currently going through. The lecture is helpful but isn’t sufficient by itself. Anyways, until next time PEACE!